How do you improve your website performance? One obvious solution would be to upgrade your hosting account. Your site will have more hardware resources to play with, so it can process more requests at once and deliver the data more quickly.

That’s what people assume, anyway. The reality is, as always, different.

Modern websites are complex systems with numerous components that must work in unison. If one of them is acting up, the entire project will slow to a crawl, no matter how powerful the hosting server is. And one of the most important components is the database.

Today, we’ll take an in-depth look into how databases work, what slows them down, and how you can optimize them.

The Importance of Database Performance Optimization

In the early days of the World Wide Web, websites used to consist almost exclusively of HTML and media files. After a user entered the site’s URL, the web server would deliver the required files for the browser to render them into visible text and pictures. Every visitor would see the same content, and all they could do with it was click hyperlinks to browse different pages and images.

Things are a whole lot different now.

We often have multiple scripts and applications that run simultaneously to turn even the simplest page into an interactive website. Such solutions can enable simple features like comments under blog posts, but they can also be used for more complicated things like payments, orders, subscriptions, etc. All this would be impossible without a database.

Every time a user interacts with your site, your web application sends requests (or queries) to your database, asking it to either store a new data record or serve an existing one. The speed with which these queries are processed is critical for the site’s overall performance.

But how exactly do you tell whether your database is quick or not?

With so many variables, answering this question is fairly tricky. There are two main types of databases and dozens of different database management systems (DBMSs), all with their individual data handling mechanisms.

Each has its own speed monitoring and measurement tools that can help you track your performance. Nevertheless, the aspects of the database’s operation that fall under scrutiny are usually the same.

Here they are:

- Response time – the response time measures how long the database takes to process a query and return the results to the user. You could argue that it’s not the most accurate metric out there. No two queries are the same, and the average response times you get at any given time depend on many different factors.

However, this is the metric that users notice the most, and it’s definitely something you want to pay attention to. If the average response time increases, the site’s overall performance deteriorates, so it’s time to do something about it.

- Query performance – the query’s structure plays an enormous part in how long the database takes to deliver the required data. By monitoring the performance of individual queries, database administrators can single out slow ones and take the necessary steps to optimize them.

- Query throughput – throughput refers to the volume of work a database can process in a given period of time. On the one hand, admins must know what this volume is in order to scale the hosting environment accordingly. However, they must also be aware of how the data is delivered.

It could be processed in a small number of large queries, or it could be the result of many smaller ones. This information helps admins draw a plan and determine what optimization techniques they’ll use.

- Database events – a database event is an event that revolves around storing, modifying, or deleting data in the database. Both users and applications can cause a db event.

For example, a visitor setting up a new account or an application automatically cleaning error logs. Too many events at one time could slow the database down, especially if they happen during busy hours. It’s the job of the system administrator to avoid such scenarios.

- Open database connections – the database connection facilitates the communication between users or software applications on one side and the database on the other. The more open connections you have at any given point, the more server resources (memory in particular) you use. However, it’s the new connection requests that have an even bigger impact on both the performance and resource usage.

That’s why the concept of connection pooling is gaining more and more traction. A connection pool is a cache of open database connections that can be shared among users and software applications. With it, the application won’t need to open a new connection for every request and will instead reuse one of the already opened connections. This reduces latency and improves the overall performance.

- Database errors – a database error often results in a complete website breakdown. The pages become inaccessible, and all visitors see is a white background and an error message that may or may not make sense to the average Joe.

The user will likely click away and never return to your site again, which is not ideal for your business. That’s why it’s crucial to identify and resolve any database errors as quickly as possible.
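To make the connection pooling idea from the list above more concrete, here is a minimal sketch in Python. It uses the standard library's sqlite3 module purely as a stand-in for a real database server, and the ConnectionPool class is our own illustration rather than the API of any particular pooling library.

```python
import queue
import sqlite3
from contextlib import contextmanager


class ConnectionPool:
    """A tiny fixed-size pool: connections are opened once and then reused."""

    def __init__(self, database, size):
        self.opened = 0  # how many real connections were ever created
        self._idle = queue.Queue(maxsize=size)
        for _ in range(size):
            self._idle.put(sqlite3.connect(database))
            self.opened += 1

    @contextmanager
    def connection(self):
        conn = self._idle.get()  # waits if every connection is in use
        try:
            yield conn
        finally:
            self._idle.put(conn)  # hand the connection back, still open

    def close(self):
        while not self._idle.empty():
            self._idle.get().close()


pool = ConnectionPool(":memory:", size=2)

# Serve 100 "requests" without opening a single extra connection.
results = []
for _ in range(100):
    with pool.connection() as conn:
        results.append(conn.execute("SELECT 1").fetchone()[0])

print(f"{len(results)} queries served by {pool.opened} connections")
pool.close()
```

The expensive step (opening a connection) happens only twice here, no matter how many queries follow. Production-grade pools add things this sketch leaves out, such as health checks and timeouts.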

However, before you can monitor and optimize your database, you need to know what sort of technology it uses.

Choosing the Right Database and DBMS

Choosing the right database management system is crucial not just for your website performance but for the future of the entire project. Before we show you how to do it, however, we need to clarify a few concepts.

First, we need to discuss the two main types of databases:

- Relational databases – relational databases are also known as SQL databases because they work with the Structured Query Language. Every bit of information you store in such a format is placed in a table. Your DBMS builds relations between the different tables so data can be retrieved and served more quickly and efficiently.

Relational databases take a simpler, more structured approach to data storage. The SQL syntax is easy to understand, and a well-designed table structure greatly reduces the chance of storing duplicate entries.

On the other hand, relational databases were traditionally designed to work on a single machine, so scaling them horizontally is more difficult. This could be an issue if your project grows to the point where a single server is no longer powerful enough to support it.

- Non-relational databases – non-relational databases are also called NoSQL (as in Not Only SQL). NoSQL databases use several storage methods for saving information. Some keep it in documents, some use columns and tables (with no relations between them), and some rely on complicated networks of nodes.

Non-relational databases can store a more diverse range of data structures, and because they can be horizontally scaled across multiple servers, they can also work great for large-scale websites with enormous volumes of data. However, for a regular website where you’ll handle standardized data sets, using a non-relational database could be an unnecessary complication.

Whether you’ll use a relational or a non-relational database depends mainly on the type of site you’re trying to build. Most content management systems and website-building applications like WordPress, Joomla, Drupal, Magento, etc., use SQL databases, and there are a couple of reasons for this.

First, many of these platforms can trace their roots back to the early 2000s when the management of non-relational databases was even more complicated than it is today. The second and more crucial reason is that the data generated by most web applications tends to be predictable. There are limited data types, and the structure rarely changes.

Ultimately, the type of database you use is determined by your project’s requirements. The same is also true for the DBMS. The DBMS sits between the user or application and the database. It’s responsible for handling the queries between the client and the server.

There are plenty of options out there. If you use an SQL database, you’ll choose between some of the following:

- MySQL

- MariaDB

- Microsoft SQL Server

- PostgreSQL

- SQLite

There are plenty of NoSQL options as well, with MongoDB and Redis among the most popular. Certain proprietary products like the Oracle DBMS work with both relational and non-relational databases.

Once again, the primary consideration is to see which DBMSs are supported by the software you’ll use to build your site. Some apps are designed to work with only one particular database technology, while others support multiple options.

Which one you choose depends on many factors, including the size of your project, the potential for growth, the tools you use to build it, and your budget. Consider them all individually and drop the unsuitable options until you’re down to a few candidates.

With the choice narrowed down, you’ll need to compare the different DBMSs and their features side-by-side. That’s when you’ll notice that certain differences between the individual systems may directly affect your database performance.

For example, if you compare MySQL and MariaDB head-to-head, you’ll find that the latter has more storage engine and speed optimization options. If performance is a major consideration, it should be obvious where the scales are tipped.

In the end, choosing the right DBMS comes down to an in-depth analysis and a careful arrangement of the priorities.

Most managed hosting accounts come with a stack of technologies that includes a database management system. Because most websites work with relational databases, the typical setup usually has an SQL DBMS.

Overall, relational databases are much more popular, so the optimization techniques we’ll show you today revolve primarily around them.

Database Indexing and Query Optimization

The faster your database queries, the quicker the website. The speed of query execution depends on numerous different factors, including the server’s performance, the number of simultaneous connections, the buffer and memory caching configuration, etc.

However, one of the simplest ways of ensuring data is delivered quickly is to organize it better. And if you use a relational database, one of the easiest things to do is to implement an indexing strategy. Let’s look at a hypothetical example to demonstrate how indexing works.

Imagine that you have a database table with 230,000 rows – roughly the number of entries in a modern English dictionary. The first column contains IDs, the second contains words or phrases, and the third contains their respective definitions. The entries are NOT arranged alphabetically.

A user wants to find the definition of the word “database,” located on row number 200,000. What the DBMS has to do is load the table into memory, start from the very top, and run through every single row until it finds the correct one. In other words, to execute a single query, your database needs to read 200,000 rows. This is hardly the most efficient approach, and it’s the reason why indexes exist.

An index is a separate data structure within the database. It contains a copy of one or more columns from a database table, as well as pointers to where the relevant data is.

In our case, we have an index with a copy of the column containing the words and pointers telling the DBMS where each entry is located on the main table. Because this is a separate data structure, we can arrange the words and phrases alphabetically without causing any other damage (like messing up the IDs, for example). The benefits of such an index are two-fold.

First, the DBMS uses the alphabetical order to quickly locate the word on the index. Instead of going through every one of the 230,000 words and phrases, it’ll check the 115,000th entry (the halfway point) in the index and figure out whether the word “database” is before or after it alphabetically. We’ll assume that under the 115,000th entry, we have the word “microscope,” so “database” is definitely above it.

“Microscope,” along with all the entries below it, will be eliminated from the search. The DBMS will repeat the same process several times until it locates the “database” entry within the index. This is much more efficient than reading through every row in a database.

Then, having found the entry in the index, the database has the coordinates of the data block that contains the relevant data. As a result, it can retrieve the definition without loading the entire database table into memory. This makes it both faster and more efficient.
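The halving process described above is a binary search, and a short Python sketch shows how few steps it needs. The entry names below are made up for illustration, and a flat sorted list is a simplification: real storage engines typically use B-tree variants, which follow the same "discard most of the data at each step" principle.

```python
def index_lookup(sorted_keys, key):
    """Binary search over a sorted index.

    Returns (position, comparisons); position is None if the key is absent.
    """
    lo, hi, comparisons = 0, len(sorted_keys), 0
    while lo < hi:
        mid = (lo + hi) // 2
        comparisons += 1
        if sorted_keys[mid] < key:
            lo = mid + 1  # the key can only be in the upper half
        else:
            hi = mid      # the key can only be in the lower half (or at mid)
    found = lo < len(sorted_keys) and sorted_keys[lo] == key
    return (lo if found else None), comparisons


# Hypothetical index with 230,000 zero-padded keys, so text order matches numeric order.
index = [f"entry{n:06d}" for n in range(230_000)]

position, comparisons = index_lookup(index, "entry199999")
print(f"found at position {position} after {comparisons} comparisons")
```

With 230,000 entries, the loop never needs more than 18 comparisons, compared with the 200,000 rows a top-to-bottom scan would read in our example.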

Any downsides? Well, being a separate structure, an index inevitably takes up some additional storage. Furthermore, you need to carefully pick and combine the columns you’ll add to your indexes. Ideally, you want to index data that is frequently searched and used.

However, the enormous performance benefits far outweigh any disadvantages.

But how do you create a database index?

One option would be to use the CREATE INDEX SQL statement. It’s well-documented and shouldn’t present too much of a challenge for anyone with some SQL experience. Those who don’t feel all that comfortable working with the command line will prefer to use a GUI-based tool like phpMyAdmin.

The steps are as follows:

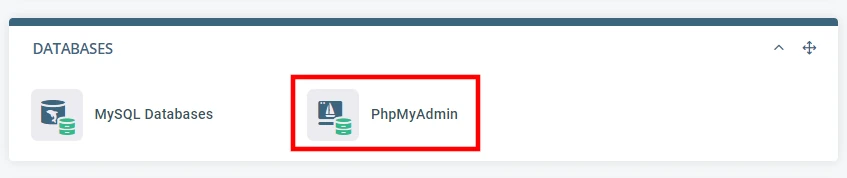

1. Open phpMyAdmin via your control panel. If you use SPanel, you can find the icon under the Databases section on the User Interface’s homepage.

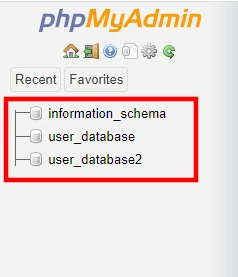

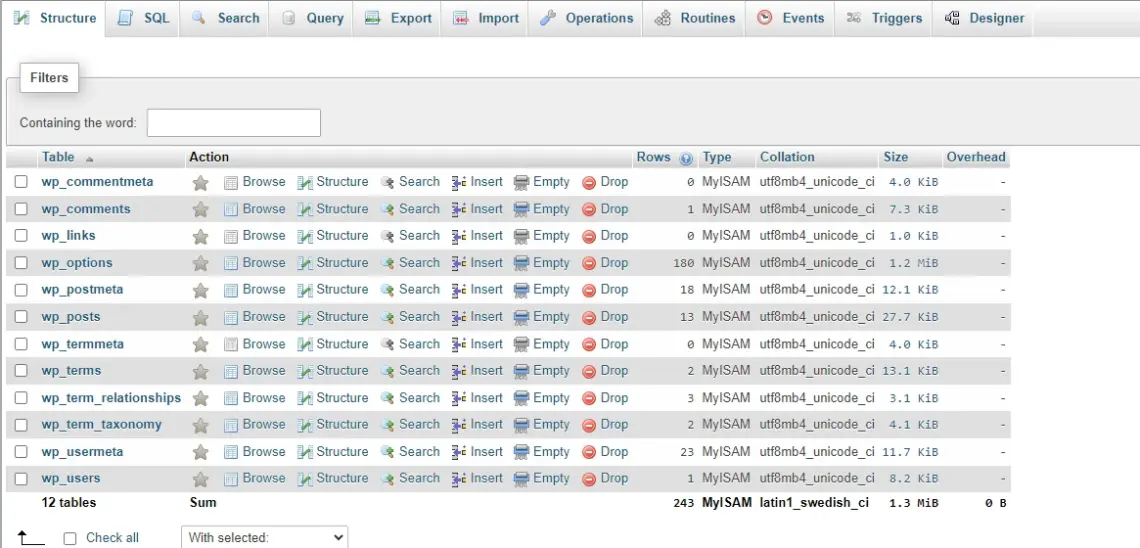

2. Select the database you’d like to modify from the menu on the left.

3. The main screen will now showcase all the tables in the selected database. Click on the one you’d like to index.

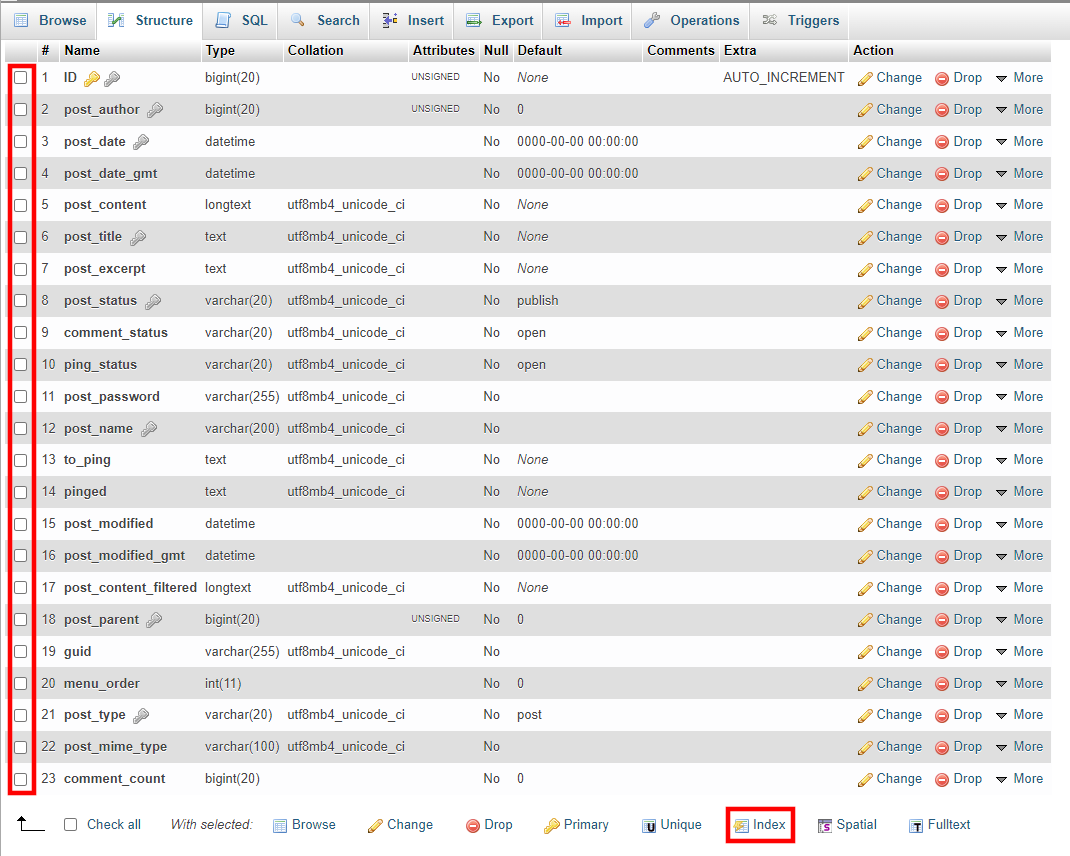

4. Go to the Structure tab. You’ll see all the columns in the table. Use the checkboxes to select the ones you want to add to the index and click the Index button below the list.

If the data is not corrupted, phpMyAdmin should display a message saying the operation is successful.

NOTE: Remember that you’re making changes to the database – one of your website’s main components. Before you try to create an index, it may be a good idea to create a full backup of your site and database in case something goes wrong.
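If you’d rather take the SQL route, the CREATE INDEX statement mentioned earlier can be sketched as follows. The example runs against an in-memory SQLite database through Python's sqlite3 module so it's safe to try anywhere. The table, column, and index names are hypothetical, and the exact syntax and query plan wording differ slightly between DBMSs and versions.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE dictionary (id INTEGER PRIMARY KEY, word TEXT, definition TEXT)"
)
conn.executemany(
    "INSERT INTO dictionary (word, definition) VALUES (?, ?)",
    [(f"word{n}", f"definition of word{n}") for n in range(10_000)],
)


def plan(sql):
    """Return SQLite's human-readable execution plan for a query."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql).fetchall()
    return " | ".join(row[-1] for row in rows)


query = "SELECT definition FROM dictionary WHERE word = 'word9000'"

plan_before = plan(query)  # no index yet: the whole table is scanned
conn.execute("CREATE INDEX idx_dictionary_word ON dictionary (word)")
plan_after = plan(query)   # the new index is used to locate the row

print("before:", plan_before)
print("after: ", plan_after)
```

Checking the execution plan before and after is a quick way to confirm that the DBMS actually uses the index you've just created.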

Memory Management and Buffering

Buffering is all about efficiently utilizing the available hardware resources. More specifically, it describes how your database management system uses your server’s RAM to speed up query execution.

Whenever the DBMS has to read or write information from or to the database, it saves a copy of the record into the server’s memory. Then, when it receives a request for the same bit of data, it fetches the copy from the RAM.

Why does it do that?

It’s all because serving data from memory is much faster than doing it from the disk. The speed difference depends on numerous factors, but even when you compare cutting-edge NVMe SSDs to standard-issue RAM, we’re talking about several times faster performance when the data is read from the memory. As you may have guessed, this all has a profound effect on the overall loading speeds and user experience.

Obviously, the server memory is not unlimited, so the database can’t store an infinite volume of data in it. In fact, your database has an allocated volume of memory specifically reserved for caching data. It’s called a buffer, and if your site uses the InnoDB storage engine, its default capacity is 128MB.

This doesn’t sound like a lot, and some of you will be happy to hear you can change it. However, remember that increasing the buffer size won’t necessarily solve all your issues.

This is partly because allocating more RAM to the buffer can leave other components crucial for your site’s performance deprived of the resources they need to run well. It’s also not guaranteed that the size of the buffer is the main performance hindrance.

That’s why, if there is an issue with the database’s buffer, you’ll want to approach the matter more meticulously.

Here are some indicators you may want to pay close attention to:

- The buffer pool hit ratio – this metric shows you what percentage of all queries are served from the buffer and what percentage goes through to the disk. By default, the DBMS will process as many queries as possible via the buffer. However, if the request is for a page that isn’t stored in memory, it will be relayed to the disk, and its processing will be much slower.

That’s why keeping the buffer pool hit ratio as high as possible is essential. Experts recommend configuring your database so around 90% of all queries are handled by the buffer.

- Page life expectancy – every copy of a record stored in the buffer is deleted after a period of time. The busier the database, the shorter this period is. Monitoring how frequently data records are removed from the buffer will give you the average page life expectancy – a metric that can help you improve your database’s performance.

It’s traditionally accepted that a reasonable page life expectancy hovers around 300 seconds. However, that conclusion was reached back when servers were less powerful. Nowadays, it’s considered normal to have an average page life expectancy of 300 seconds for every 4GB of RAM.

The busier the server, the more frequently pages must be deleted from the buffer. This will push the page life expectancy down and increase the number of requests that can’t be processed by the buffer. Because the disk is so much slower, the overall website performance will inevitably be affected.

- Page reads/sec. – this metric indicates how much data is read from the disk every second. You want to keep it as low as possible because, no matter how quick modern SSDs are, queries handled by the server’s disk are still comparatively slow.

There are many approaches to minimizing the role of the disk in database query execution. You can do it by providing an adequately sized buffer that caches frequently searched data, but you can also ensure the information is properly indexed so the DBMS can find it more quickly.
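The first two indicators above boil down to simple arithmetic, sketched here in Python. The counter values are invented for illustration. On MySQL with InnoDB, the corresponding figures would come from the Innodb_buffer_pool_read_requests and Innodb_buffer_pool_reads status variables, while page life expectancy is a counter exposed by Microsoft SQL Server.

```python
def buffer_pool_hit_ratio(read_requests, disk_reads):
    """Share of read requests served from memory rather than from disk."""
    if read_requests == 0:
        return 1.0  # nothing was requested, so nothing missed the buffer
    return 1 - disk_reads / read_requests


def ple_threshold_seconds(buffer_pool_gb):
    """Rule of thumb from above: 300 seconds for every 4GB of RAM."""
    return buffer_pool_gb / 4 * 300


# Hypothetical counters: 1,000,000 read requests, 50,000 of which hit the disk.
ratio = buffer_pool_hit_ratio(read_requests=1_000_000, disk_reads=50_000)
threshold = ple_threshold_seconds(16)

print(f"hit ratio: {ratio:.1%}")
print(f"page life expectancy target for 16GB: {threshold:.0f} seconds")
```

A ratio that keeps slipping below your target, or a page life expectancy well under the threshold, suggests the buffer is too small for the working data set or that queries are reading more pages than they should.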

Managing your database buffer size

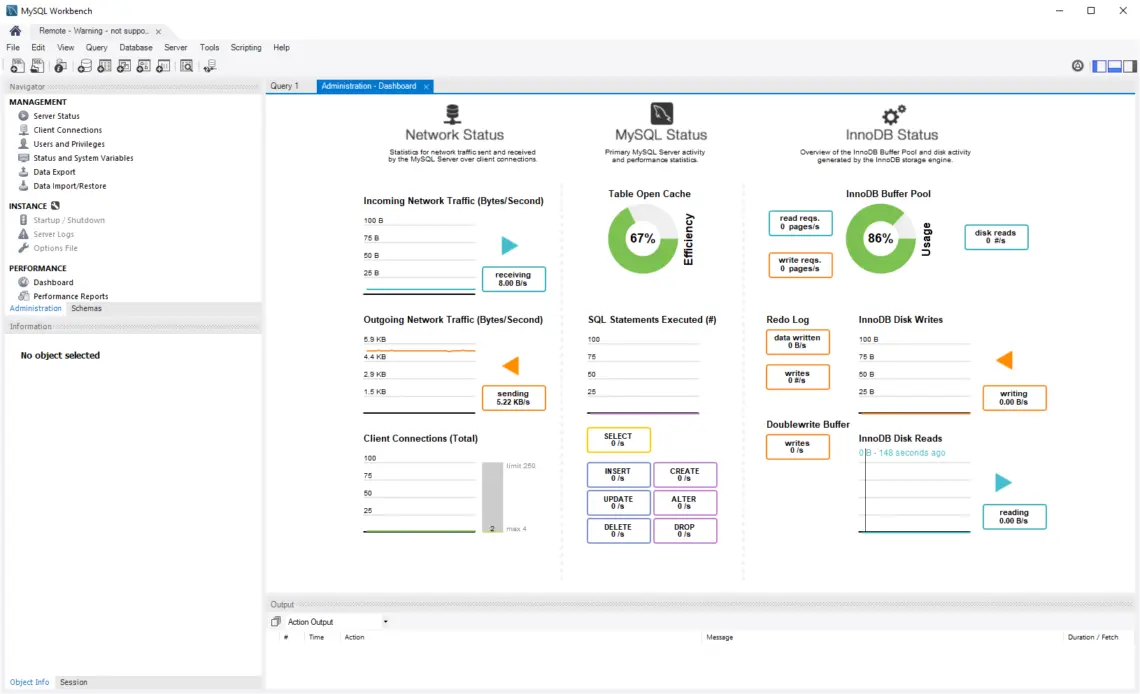

There are many ways to monitor the health of your database’s buffer pool. For example, MySQL Workbench, a free GUI-based database management tool, has a performance dashboard showing all kinds of statistics, including the buffer pool usage, the read/write requests, the disk reads per second, etc.

Changing the buffer’s size is more complicated. It requires modifying MySQL’s primary configuration file – my.cnf. Its precise location depends on the operating system. Most Linux distributions based on Red Hat store it in the /etc/ folder. The variable you need to add or modify depends on the storage engine. If you use InnoDB – it’s innodb_buffer_pool_size. For MyISAM-powered databases, it’s key_buffer_size.
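To show what the change looks like in practice, the sketch below edits a made-up my.cnf fragment with Python's configparser module. The file contents, the datadir path, and the 1G value are assumptions for illustration only. A real configuration file may also contain directives (like !includedir) that configparser can't parse, so treat this as a demonstration of the setting rather than a ready-made tool.

```python
import configparser
import io

# Hypothetical my.cnf contents; a real file will have more options.
original = """\
[mysqld]
datadir = /var/lib/mysql
innodb_buffer_pool_size = 128M
"""

config = configparser.ConfigParser(allow_no_value=True)
config.optionxform = str  # keep option names exactly as written
config.read_string(original)

# Raise the InnoDB buffer pool from the 128MB default to 1GB.
config["mysqld"]["innodb_buffer_pool_size"] = "1G"

updated = io.StringIO()
config.write(updated)
print(updated.getvalue())
```

Whichever way you edit the file, changes to it are only picked up when the database server starts, so plan for a restart during a quiet period.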

Utilizing Caching Mechanisms

As we discovered in the previous section, your relational database has a built-in mechanism to store information in memory for faster retrieval. In other words, it effectively has a caching system out of the box. In light of this, you may think implementing other caching solutions would be unnecessary.

This is not quite the case.

Most caching solutions focus on static data like images, CSS stylesheets, and JavaScript files, but some advanced systems can also store database-generated data in the server’s RAM to speed up delivery.

They outperform the default buffer pool in many key areas, including:

- The cache storage method – your database’s buffer contains pages of information with multiple table rows and records. Caches built by dedicated solutions tend to be much better organized.

- The data retrieval mechanism – caching solutions rely on simple key/value lookups to locate the required data, resulting in much quicker delivery.

- Scalability – dedicated caching solutions are built with projects of all shapes and sizes in mind. As a result, they can support your project and ensure excellent performance even as your site grows.

There are dozens of caching systems, all with their advantages and disadvantages. When it comes to database caching, however, two solutions stand out from the crowd. Let’s have a look at them.

Memcached

Memcached was first released more than two decades ago, and throughout the years, it has become an integral part of many development stacks. It’s popular with website owners because of its advanced versatility. It supports the most popular programming languages and has an easy-to-use API, so configuring your application to work with it should be no problem. A special plugin for MySQL databases makes the setup quick and easy.

Memcached also supports distributed architecture, meaning cached data can be spread across multiple nodes. This sounds rather expensive, but thanks to containerization and orchestration tools like Docker and Kubernetes, it doesn’t need to be.

At the same time, using multiple nodes will not only distribute the load and improve performance but also minimize potential downtime. This is why, in the past, even large social networks like Twitter used Memcached.

Now, though, they’ve also implemented another caching system.

Redis

Initially released in 2009, Redis is similar to Memcached in that it’s open-source and supported by most web hosting providers. Like Memcached, it stores data in the server’s RAM to deliver it more quickly.

Unlike its competitor, Redis can make the data it holds in memory persistent: snapshot files and logs restore the information upon startup. This, coupled with its key-value data retrieval system, means that Redis can be used as a fully-featured non-relational database management system.

If you use an SQL database, Redis can be implemented as a caching solution. It offers support for richer data types (lists, sets, hashes, and sorted sets, for example), something Memcached lacks, and the range of languages and applications Redis works with is even more impressive.

Overall, several features make Redis more powerful and flexible than Memcached. However, both solutions can significantly speed up your database, so considering them is a good call.
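Whichever of the two you pick, the typical way an application uses it is the same: check the cache first, fall back to the database on a miss, and remove the cached copy when the underlying data changes. Below is a minimal sketch of that pattern in Python. A plain dictionary stands in for Memcached or Redis, SQLite stands in for your SQL database, and the table and function names are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE products (id INTEGER PRIMARY KEY, name TEXT)")
conn.executemany(
    "INSERT INTO products VALUES (?, ?)", [(1, "Keyboard"), (2, "Mouse")]
)

cache = {}          # stand-in for a Memcached or Redis client
database_hits = 0   # counts how often we really had to query the database


def get_product_name(product_id):
    """Return the product name, querying the database only on a cache miss."""
    global database_hits
    key = f"product:{product_id}"
    if key in cache:
        return cache[key]
    database_hits += 1
    row = conn.execute(
        "SELECT name FROM products WHERE id = ?", (product_id,)
    ).fetchone()
    name = row[0] if row else None
    cache[key] = name
    return name


def rename_product(product_id, new_name):
    """Update the database and drop the now-stale cache entry."""
    conn.execute(
        "UPDATE products SET name = ? WHERE id = ?", (new_name, product_id)
    )
    cache.pop(f"product:{product_id}", None)


names = [get_product_name(1) for _ in range(5)]  # 1 database query, 4 cache hits
rename_product(1, "Mechanical keyboard")
renamed = get_product_name(1)                    # miss after invalidation

print(names[0], "->", renamed, f"({database_hits} database queries in total)")
```

Six reads cost only two database queries here. With a real cache server, you'd usually also set an expiry time on each entry so stale data can't linger indefinitely.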

Disk I/O Optimization

Pretty much all optimization techniques are ultimately aimed at reducing the number of read and write operations the disk needs to perform (disk I/O).

Thanks to indexes, the DBMS doesn’t need to scan an entire table every time a piece of data is required, and with the help of buffering, many of the queries are handled by the server’s random access memory rather than the much slower disk.

However, if the size of your database grows sufficiently, the volume of the data will be too big, and the searches will be too random for the optimization methods above to be effective. The disk I/O will rise despite your best efforts.

Unfortunately, dealing with the issue is not as easy as you might think. This is partly because diagnosing the problem requires some technical knowledge and partly because the different DBMSs and storage engines have different aspects of their operations that may affect I/O.

There is no universally supported solution that can lower the number of input and output operations on your disk. However, there are a few techniques that should work in most cases:

Optimizing your tables and indexes

We’ll start with the most basic method for lowering disk overhead. An active database has to deal with thousands of requests that insert, modify, and remove information stored in tables. The more of these requests, the more disorganized the data becomes.

Most DBMSs have built-in mechanisms for reorganizing the stored information and reclaiming unused space, which speeds up scans and delivers the correct record more quickly. In MariaDB and MySQL, for example, this is done with the OPTIMIZE TABLE statement. In PostgreSQL, it’s VACUUM.

It’s recommended to use these utilities periodically as a part of the database’s regular maintenance. If you’re not comfortable working with the command line, tools like phpMyAdmin can offer the functionality through a graphic interface.
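To see what this kind of maintenance achieves, here is a small Python sketch that uses SQLite, which has its own VACUUM command. It's only an analogy for OPTIMIZE TABLE in MySQL/MariaDB or VACUUM in PostgreSQL, as the internals differ from one DBMS to another. The table name and row counts are made up.

```python
import os
import sqlite3
import tempfile

with tempfile.TemporaryDirectory() as folder:
    path = os.path.join(folder, "demo.db")
    conn = sqlite3.connect(path)
    conn.execute("CREATE TABLE logs (id INTEGER PRIMARY KEY, message TEXT)")
    conn.executemany(
        "INSERT INTO logs (message) VALUES (?)",
        [("x" * 200,) for _ in range(5000)],
    )
    # Delete 90% of the rows; the freed space stays inside the database file.
    conn.execute("DELETE FROM logs WHERE id % 10 != 0")
    conn.commit()

    size_before = os.path.getsize(path)
    conn.execute("VACUUM")  # rebuild the file and release the unused pages
    size_after = os.path.getsize(path)
    conn.close()

print(f"before: {size_before} bytes, after: {size_after} bytes")
```

Deleting rows alone doesn't shrink the file. It's the maintenance command that compacts the remaining data, which is why running it periodically on busy tables pays off.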

Improving your indexes

An index will only boost a database’s performance if it’s properly configured. Extra care won’t go amiss when indexing a large database.

If you include a column in your index that is frequently updated, the DBMS will need to perform more write operations on the disk. This is especially true if you have a database with thousands (or even hundreds of thousands) of rows.

So, as your database grows, make sure you monitor its performance. If it slows down, check the indexes and see if any frequently modified data is being indexed. If it is, it may be a good idea to drop or disable some indexes and see if this will affect the speed.

Partitioning

Partitioning means splitting large database tables into smaller, easier-to-manage parts. The individual partitions can be hosted on different filesystems, meaning the load is more evenly spread, and more resources are available to guarantee quick loading speeds. Furthermore, breaking down the enormous table into smaller chunks enables the DBMS to find the required information more quickly and improves the database’s overall performance.

Setting the correct checkpoints

A checkpoint tells the DBMS to copy any modified data from the memory and save it for permanent storage on the server’s HDD or SSD. Having regular checkpoints ensures that the modifications you make to your database will be saved, and no data will be lost.

However, every checkpoint inevitably generates activity on the disk. There are ways to moderate this activity.

You can schedule your checkpoints during less busy periods for your site to avoid unwanted I/O spikes. Furthermore, most DBMSs let you tune how often checkpoints run and how quickly the modified data is flushed (PostgreSQL, for example, has the checkpoint_timeout and checkpoint_completion_target settings). Spreading the writes out over a longer period can relieve some of the pressure on your server’s primary storage device during heavy load.

Database Schema Design

The schema defines the crucial elements in your database (tables, columns, data types, indexes, and constraints) and the relations between them. If you liken the database to a machine, the schema will be the blueprint showing the server how the individual components were assembled and how they work.

Needless to say, if the schema is poorly designed, it will be difficult for both database administrators and software applications to understand. Crucially, it will make data retrieval trickier and slow down query execution times. So, the design of your schema is essential.

But how do you pick the right one?

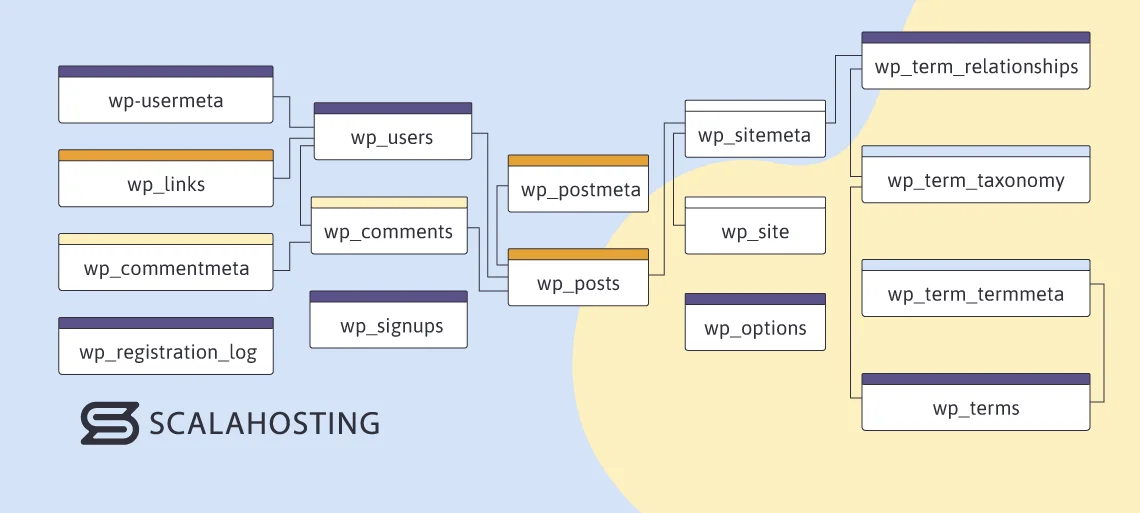

First, you start with an entity relationship diagram (or ERD). An ERD is a flowchart showing you how elements are related to each other within a system. In our context, the elements are the tables, columns, rows, and entries, and the system is the database.

It’s not technically a part of the database. It’s a visual representation used by database administrators to determine how their data will be organized. Below, you can see an ERD of a typical WordPress database.

When you start drawing your ERD, you’ll find dozens of models applicable to different types of databases.

For example, the hierarchical model is a tree-like structure with clear parent-child relations between individual entries. If data entries have a one-to-many relationship, this is the model for you.

The network model introduces the notion of sets. It doesn’t stick to a rigid parent-child structure and is suitable if your data organization is based on many-to-many relationships. The downside is that implementing and maintaining the network model is trickier.

The choice of a model for your ERD and schema depends on numerous factors, including:

- The volume of the data stored in the database.

- The volume of data being queried at any given time.

- The frequency with which data is modified.

- The type of data you need to process and store.

- The performance benchmarks you’ve set yourself.

- The scalability options you want to have.

With the model chosen and the schema design clear, it’s time to create it. There are multiple ways to set up a new schema. Traditionalists will prefer to write the required statements in an SQL file, upload it to the server, and run it through the command line.

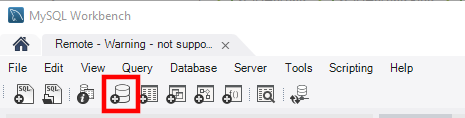

For those looking for a more novice-friendly option, doing it via a tool like MySQL Workbench will probably be a better choice. After establishing a connection to the database server, you’ll see the button for creating a new schema in the main toolbar.

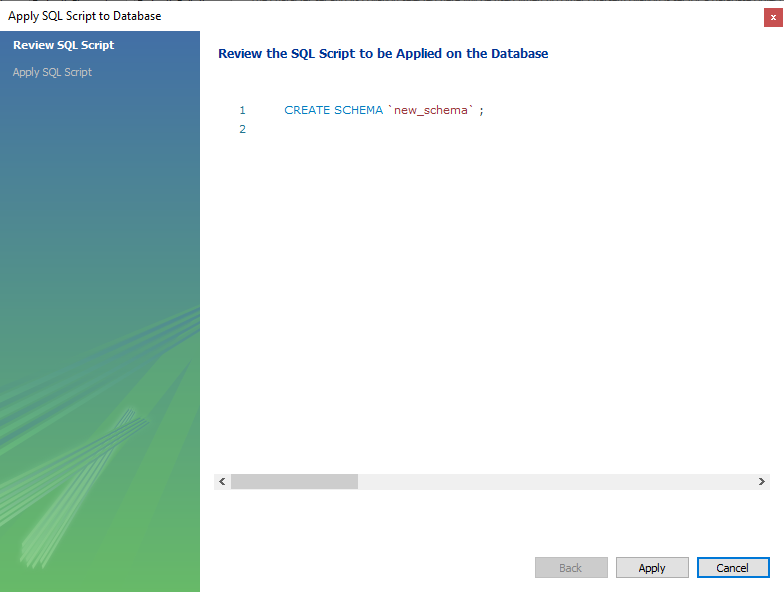

You’ll be asked to enter the name of the new schema and the default character set and collation. After you click Apply, MySQL Workbench will open the SQL editor, where you can enter the required statements.

While designing your database, you should focus on several things that can result in faster speeds and easier management. Here are some of them:

- Easy-to-understand naming conventions – there are a few rules of thumb that you want to follow here. When naming tables and columns, use full words instead of acronyms and separate them with underscores. Keep table and column names as short as you can without sacrificing clarity, and make sure you don’t use reserved words if you don’t want to get syntax errors.

- Use the correct data type – your relational database can store all sorts of information. However, if you want it to be as efficient as possible, you must set the correct data type for every column in every table.

For example, a column that will store users’ dates of birth will need to use the DATE type. If it’s reserved for the exact date and time when they created their accounts, it’ll have to use the DATETIME type.

If you don’t set the correct data types, your database will use too much disk space, and query execution will be comparatively slow.

- Stick to normalization best practices – normalization is a database design principle that eliminates data redundancy, streamlines your db structure, and can shave significant chunks off your query execution times. There are no fewer than seven different normal forms that can help you with that. They’re rarely all applied at the same time, but researching them is worth the effort.
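The three guidelines above can be combined in one small schema, sketched here with Python's sqlite3 module. The table and column names are hypothetical. Note that SQLite treats DATE and DATETIME as loose type hints, whereas MySQL, MariaDB, and PostgreSQL enforce them strictly, so the data type benefits are greater on those systems.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # SQLite needs this to enforce relations

conn.executescript("""
-- City names live in one place only instead of being repeated for every user.
CREATE TABLE cities (
    city_id   INTEGER PRIMARY KEY,
    city_name TEXT NOT NULL UNIQUE
);

-- Descriptive, underscore-separated names and a fitting type for each column.
CREATE TABLE users (
    user_id       INTEGER PRIMARY KEY,
    first_name    TEXT NOT NULL,
    last_name     TEXT NOT NULL,
    date_of_birth DATE NOT NULL,
    created_at    DATETIME NOT NULL DEFAULT CURRENT_TIMESTAMP,
    birth_city_id INTEGER NOT NULL REFERENCES cities (city_id)
);
""")

conn.execute("INSERT INTO cities (city_id, city_name) VALUES (1, 'New York')")
conn.execute(
    "INSERT INTO users (first_name, last_name, date_of_birth, birth_city_id) "
    "VALUES ('John', 'Williams', '1990-09-25', 1)"
)

row = conn.execute(
    "SELECT u.first_name, c.city_name FROM users u "
    "JOIN cities c ON c.city_id = u.birth_city_id"
).fetchone()

# A user pointing at a city that doesn't exist is rejected by the schema itself.
try:
    conn.execute(
        "INSERT INTO users (first_name, last_name, date_of_birth, birth_city_id) "
        "VALUES ('Jane', 'Smith', '1989-04-30', 99)"
    )
    rejected = False
except sqlite3.IntegrityError:
    rejected = True

print(row, "| invalid insert rejected:", rejected)
```

Storing the city once and referencing it by ID is a small example of normalization: renaming a city becomes a single-row update, and the relation guards against inconsistent entries.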

Scaling Database Resources

Scaling means adding more hardware resources to your hosting infrastructure to speed up your website.

The additional memory, processing power, and storage can indeed eliminate quite a few bottlenecks, but upgrading your account shouldn’t be your first port of call. In fact, before you resort to it, you have to ensure you have a fully optimized database with all the speed-enhancing techniques already implemented. Failing to do that can affect your project in not one but two ways.

First, there’s the question of whether you need a hardware upgrade at all. An optimized database can perform well and execute more queries on less powerful hardware than a poorly organized one running on top-notch machinery. Furthermore, as your database grows bigger and bigger, the lack of any optimization will become more apparent, and even the scaled-up resources won’t be good enough to guarantee quick loading speeds.

Second, the most effective scaling techniques usually make the database setup more convoluted and trickier to maintain. You don’t want to deal with the extra complexity unless you really need to.

So, scaling isn’t a shortcut that eliminates the need to improve your database design and operation. However, it’s often the best way to ensure queries are executed quickly and efficiently.

There are two types of database scaling – vertical and horizontal. Let’s explore the concepts in a bit more detail.

- Vertical database scaling – vertical scaling basically means upgrading your server’s hardware. How you’re going to approach this depends on the type of hosting service you use. If you’re using a dedicated server, you have to either shut down the machine and swap the physical components or migrate the entire website to a more powerful server.

No such thing is required if you use a cloud VPS. Thanks to virtualization, your virtual server is infinitely scalable. You can add more CPU cores, upgrade the RAM, or expand the storage capacity with a few clicks. The upgrade rarely needs anything more than a quick reboot, so it won’t affect your uptime stats too badly.

- Horizontal database scaling – horizontal database scaling is commonly implemented through sharding and is reserved mainly for very large databases, often with tables that hold millions of rows. It involves splitting a large table into two or more smaller ones and storing them on separate nodes. These nodes could be individual physical machines, virtual servers, or Docker containers.

But how does doing that affect your database performance?

First, when the DBMS has to find a particular record, instead of going through an enormous table with thousands upon thousands of rows and columns, it will scan a much smaller subset of information. This reduces the load on the server, which helps with loading speeds. Disk I/O also shrinks, so data is delivered more quickly.

Data availability improves as well. If one of the nodes goes down, users can still access the information stored in the parts of the sharded table that remain unaffected.
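To make the routing idea concrete, here is a minimal Python sketch of how an application might decide which node holds a given record. It assumes hash-based routing on the record's ID. The node names and the `shard_for` and `group_by_shard` helpers are hypothetical, and real DBMSs and sharding tools use their own schemes.

```python
import hashlib

# Hypothetical node names; a real setup would keep connection details here.
SHARD_NODES = ["db-node-0", "db-node-1"]


def shard_for(record_id, nodes=SHARD_NODES):
    """Pick the node responsible for the record with the given ID."""
    # A stable hash sends the same ID to the same node on every call.
    digest = hashlib.sha256(record_id.encode("utf-8")).digest()
    index = int.from_bytes(digest[:8], "big") % len(nodes)
    return nodes[index]


def group_by_shard(record_ids, nodes=SHARD_NODES):
    """Show which IDs end up on which node."""
    placement = {node: [] for node in nodes}
    for record_id in record_ids:
        placement[shard_for(record_id, nodes)].append(record_id)
    return placement


if __name__ == "__main__":
    for node, ids in group_by_shard(["001", "002", "003", "004"]).items():
        print(node, ids)
```

Each lookup touches only the node that `shard_for` returns, so the other nodes do no work for that query, and records on a healthy node stay reachable even if another node is down.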

But how is a table split into two?

Once again, there are two approaches – horizontal and vertical.

To illustrate the difference, we’ll take a simple table with four rows and five columns. It contains, let’s say, the details of registered users, and it looks like this:

| ID | First name | Last name | Date of birth | Place of birth |
|---|---|---|---|---|
| 001 | John | Williams | 25.09.1990 | New York |
| 002 | Jane | Smith | 30.04.1989 | San Diego |
| 003 | William | Jones | 05.06.1994 | Dallas |
| 004 | Sam | Turner | 04.03.1992 | Boston |

Splitting the table horizontally literally means drawing a horizontal line through it. We end up with two tables that look like this:

| ID | First name | Last name | Date of birth | Place of birth |
|---|---|---|---|---|
| 001 | John | Williams | 25.09.1990 | New York |
| 002 | Jane | Smith | 30.04.1989 | San Diego |

And this:

| ID | First name | Last name | Date of birth | Place of birth |
|---|---|---|---|---|
| 003 | William | Jones | 05.06.1994 | Dallas |
| 004 | Sam | Turner | 04.03.1992 | Boston |
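The horizontal split above can be sketched in a few lines of Python. This is only an illustration that assumes a range-based split on the ID column. The `split_horizontally` and `find_user` helpers and the boundary value are hypothetical, and plain lists stand in for the two nodes.

```python
USERS = [
    {"id": "001", "first_name": "John", "last_name": "Williams",
     "date_of_birth": "25.09.1990", "place_of_birth": "New York"},
    {"id": "002", "first_name": "Jane", "last_name": "Smith",
     "date_of_birth": "30.04.1989", "place_of_birth": "San Diego"},
    {"id": "003", "first_name": "William", "last_name": "Jones",
     "date_of_birth": "05.06.1994", "place_of_birth": "Dallas"},
    {"id": "004", "first_name": "Sam", "last_name": "Turner",
     "date_of_birth": "04.03.1992", "place_of_birth": "Boston"},
]


def split_horizontally(rows, boundary_id):
    """Split rows into two shards; every row keeps all of its columns."""
    # Zero-padded IDs of equal length compare correctly as strings.
    lower = [row for row in rows if row["id"] <= boundary_id]
    upper = [row for row in rows if row["id"] > boundary_id]
    return lower, upper


def find_user(user_id, shards, boundary_id):
    """Look only in the shard whose ID range can contain user_id."""
    lower, upper = shards
    shard = lower if user_id <= boundary_id else upper
    for row in shard:
        if row["id"] == user_id:
            return row
    return None


BOUNDARY_ID = "002"  # assumed split point: IDs up to 002 go to the first shard
SHARDS = split_horizontally(USERS, BOUNDARY_ID)

print(find_user("003", SHARDS, BOUNDARY_ID)["first_name"])  # William
```

The lookup for ID 003 never reads the first shard, which is the whole point of the split.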

These two tables can be situated on separate nodes.

You have probably guessed the logic behind vertical splitting. This time, we divide the columns between two tables, which we’ll place on separate servers. However, because we need to associate the data in one table with the data in the other, both tables keep the ID column as a shared identifier.

The result is:

| ID | First name | Last name |
|---|---|---|
| 001 | John | Williams |
| 002 | Jane | Smith |
| 003 | William | Jones |
| 004 | Sam | Turner |

And:

| ID | Date of birth | Place of birth |
|---|---|---|
| 001 | 25.09.1990 | New York |
| 002 | 30.04.1989 | San Diego |
| 003 | 05.06.1994 | Dallas |
| 004 | 04.03.1992 | Boston |

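The idea is the same in both cases: the DBMS has far less data to scan on each node (fewer rows after a horizontal split, fewer columns after a vertical one), so it can find the needed information much quicker.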
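The vertical split, and the role the ID column plays in stitching the two halves back together, can be sketched the same way. Again, this is a simplified illustration with hypothetical helper names (`split_vertically`, `join_on_key`) and in-memory lists standing in for the two servers.

```python
USERS = [
    {"id": "001", "first_name": "John", "last_name": "Williams",
     "date_of_birth": "25.09.1990", "place_of_birth": "New York"},
    {"id": "002", "first_name": "Jane", "last_name": "Smith",
     "date_of_birth": "30.04.1989", "place_of_birth": "San Diego"},
    {"id": "003", "first_name": "William", "last_name": "Jones",
     "date_of_birth": "05.06.1994", "place_of_birth": "Dallas"},
    {"id": "004", "first_name": "Sam", "last_name": "Turner",
     "date_of_birth": "04.03.1992", "place_of_birth": "Boston"},
]


def split_vertically(rows, key, left_columns):
    """Divide the columns between two tables; both keep the key column."""
    left, right = [], []
    for row in rows:
        left.append({c: v for c, v in row.items()
                     if c == key or c in left_columns})
        right.append({c: v for c, v in row.items()
                      if c == key or c not in left_columns})
    return left, right


def join_on_key(left, right, key):
    """Rebuild the full rows by matching the shared key column."""
    right_by_key = {row[key]: row for row in right}
    return [{**row, **right_by_key[row[key]]} for row in left]


NAMES, BIRTH_DETAILS = split_vertically(
    USERS, key="id", left_columns={"first_name", "last_name"}
)

print(NAMES[0])          # only the ID and name columns
print(BIRTH_DETAILS[0])  # only the ID and birth columns
print(join_on_key(NAMES, BIRTH_DETAILS, "id") == USERS)  # True
```

A query that needs only names reads the narrower first table. The join is required only when a query needs columns from both halves, which is the extra cost of this kind of split.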

Conclusion

The task of speeding up a database sounds daunting, and, as you can see from our extensive report above, the process can sometimes be pretty complicated. Far too many factors play a role in determining the performance, and fixing them all could be a challenge for a single website owner.

The good news is that if you use a managed VPS service, you can rely on experts to consider these factors for you. The DBMS is a part of the stack that you get out of the box, and it’s usually configured to work with the most popular applications and perform at its best.

However, this doesn’t mean you shouldn’t know how it works or what you can do to improve its speed. The in-depth report above should give you all the information you need.

FAQ

Q: How do I check my VPS’s performance?

A: If your server’s performance is not up to scratch, you will likely notice it while browsing your pages. That said, it’s best to rely on one of the many online page speed test tools. Use them regularly, and you can set a benchmark for how quick your website should be. When it deviates from that benchmark, you’ll be able to spot the difference before it becomes noticeable to the user.

Q: How do you optimize your database performance?

A: Learning more about how your database works will help you understand what needs to be done to speed it up. It will help you learn how to implement techniques like indexing and caching and show you how they help boost performance.

Q: What is the goal of optimizing database performance?

A: A lot of what you see on your screen when browsing a typical website is stored in the database. The quicker this data is delivered, the quicker your page will be. And the quicker the page, the better the user experience – something crucial for every online project.